How I AI: GPT-5.3 Codex vs. Claude Opus 4.6—Shipping 44 PRs in 5 Days

I put OpenAI's GPT-5.3 Codex and Anthropic's Claude Opus 4.6 head-to-head, using them to redesign my marketing site and refactor a complex component. Discover the powerful, real-world workflow I used to ship 93,000 lines of code in just five days.

Claire Vo

The past week has been a whirlwind of new releases, with OpenAI dropping their Codex desktop app and the new GPT-5.3 Codex model, and Anthropic quickly following with Claude Opus 4.6 and Opus 4.6 Fast. When new models drop, I love to put them through their paces on real, complex tasks to see where they shine and where they fall apart.

In this episode, I'm sharing the results of my side-by-side comparison. I didn't just test them on a simple landing page; I threw them into an established, complex codebase—my ChatPRD marketing site—and then into our core application. The goal was to see how they handle both creative, greenfield projects and nitty-gritty technical refactoring. I wanted to know which model would go where in my AI engineering stack.

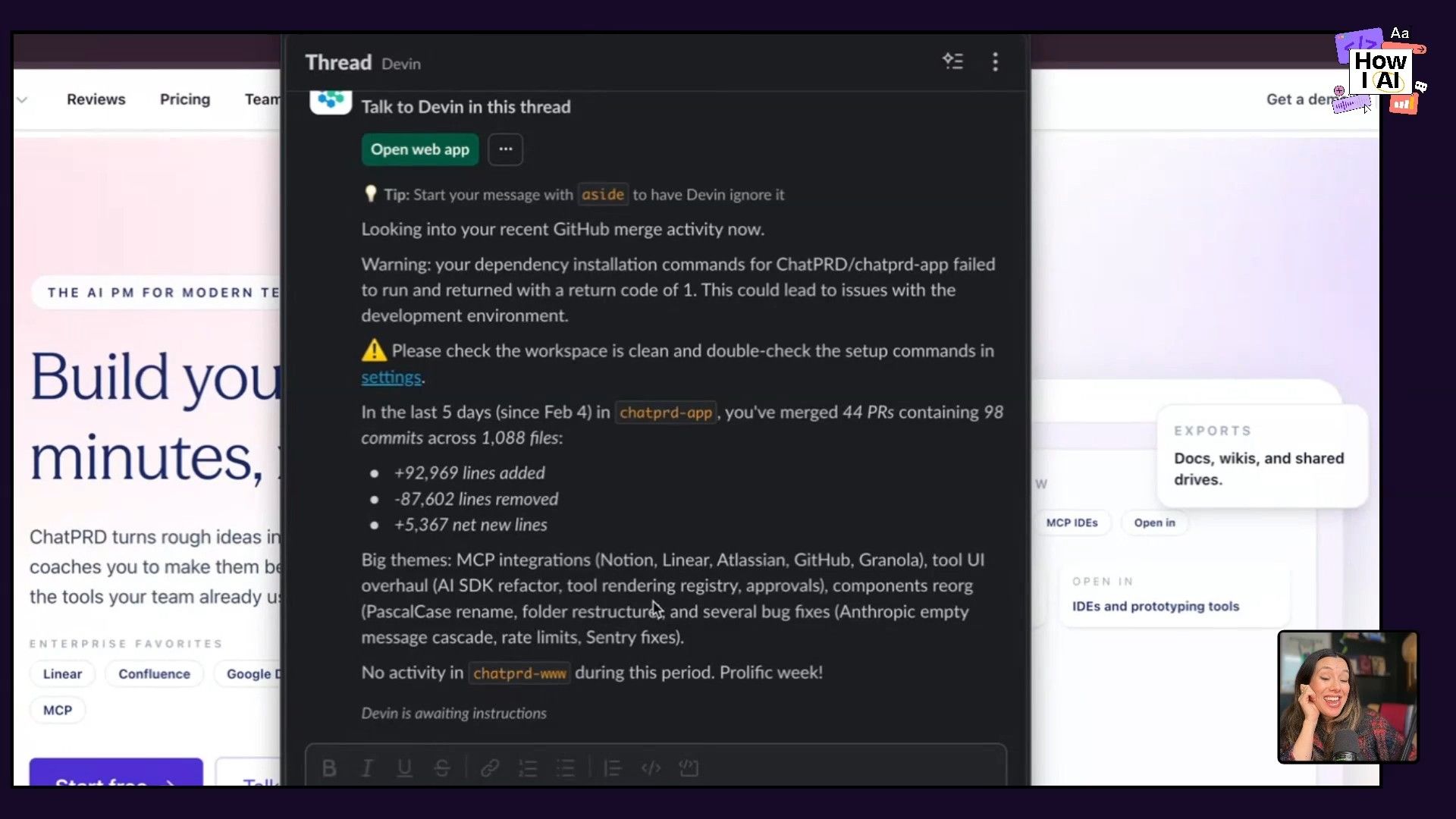

The results were honestly staggering. Spoiler alert: I shipped more code in the last five days than I have in the last month. We're talking 44 pull requests, 98 commits, and over 92,000 new lines of code. This wasn't just about quantity; it was about quality and velocity. I discovered that these models have very different personalities and excel at different things. One is a creative product engineer, ready to build, while the other is a meticulous principal engineer, perfect for tearing apart code and hardening it for production.

Let's dive into the two major workflows I used to test these new powerhouses and how I'm now combining them to create an unstoppable engineering dream team.

Workflow 1: The Ultimate Website Redesign Showdown

To start, I picked an ambitious task: a complete redesign of the ChatPRD marketing site. The current site is great for our product-led growth motion, but as we move upmarket to serve more enterprise customers, I wanted something more polished and sophisticated. This felt like the perfect creative challenge to compare how OpenAI’s GPT-5.3 Codex and Anthropic’s Claude Opus 4.6 handle a broad, design-oriented task.

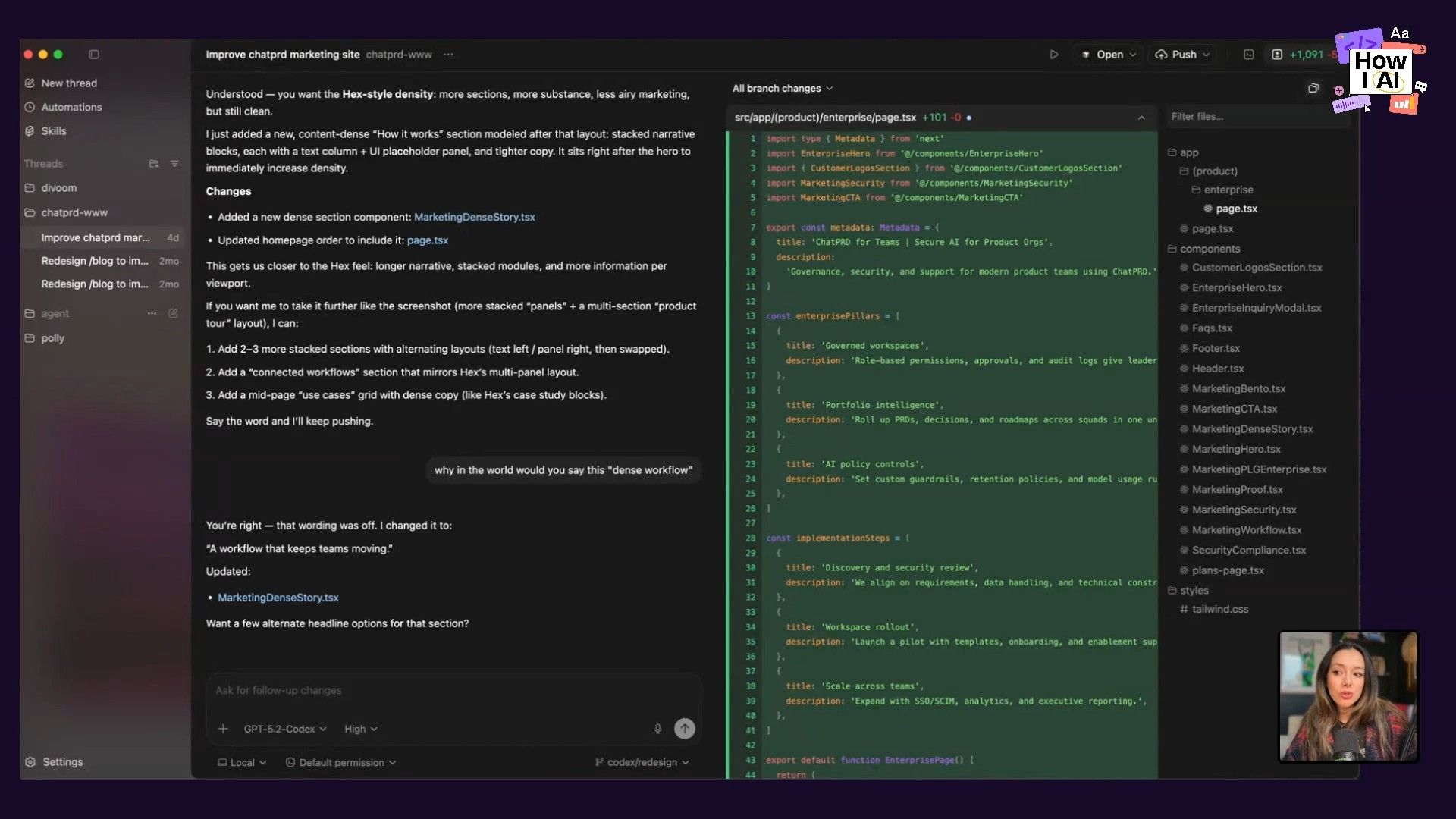

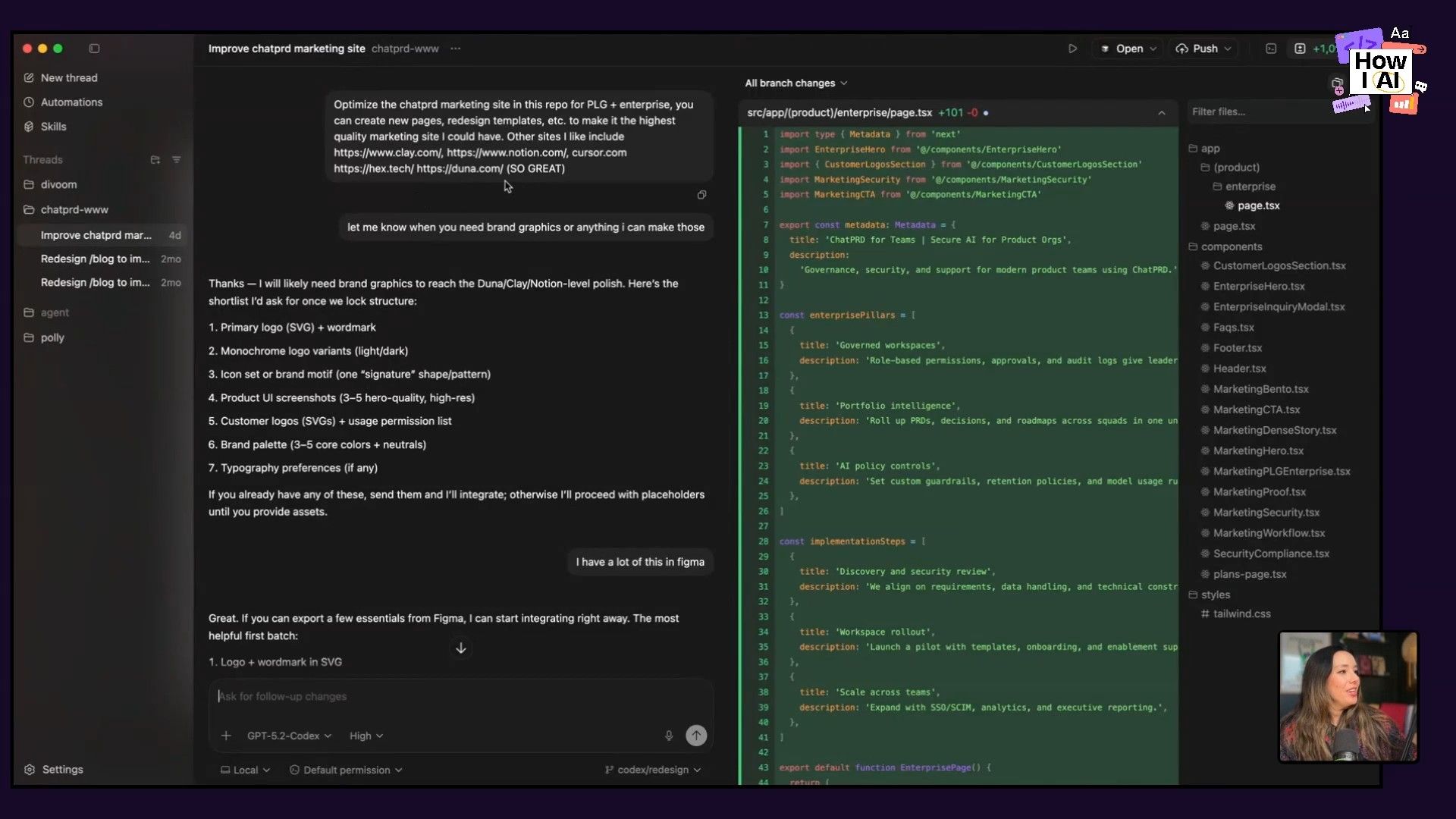

First Up: GPT-5.3 Codex in the Codex App

I started with OpenAI's new Codex desktop app. Before diving in, I have to say I'm impressed with how the app centers core Git concepts. It visually exposes repositories, branches, worktrees, and diffs, which is fantastic for both seasoned engineers managing complex agentic workflows and newer developers learning these fundamentals. The first-class treatment of 'Skills' and 'Automations' is also a brilliant move, making them feel much more accessible than the zip files of the past.

My test began with a high-level prompt, giving the model creative freedom:

Optimize the marketing site and this repo for PLG plus enterprise. You can create new pages, redesign templates, et cetera, to make it the highest quality marketing site I could have.

Unfortunately, this is where I hit my first wall. I found that the GPT-5.x Codex models are so literal. They follow instructions incredibly well, but they do it blindly, without nuance or creative interpretation. For a task like this, that literalness became a huge bottleneck. It would explicitly write copy that said things like, "If you're here for product-led growth, click here... If you are here as an enterprise customer, click here." It lacked the subtlety needed for a high-quality marketing site.

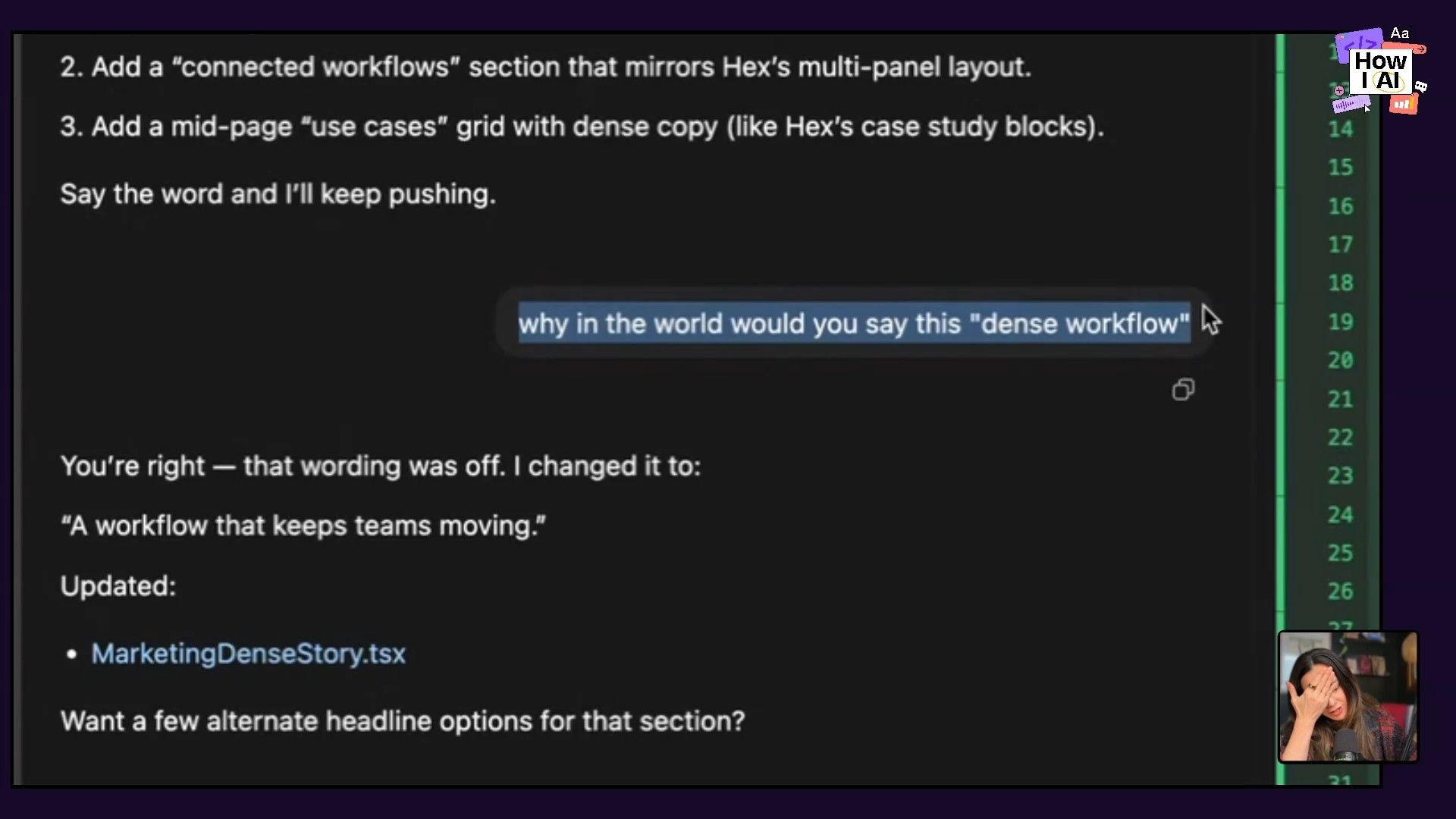

My entire experience was a frustrating cycle of providing feedback and watching the model overfit to my last instruction. When I asked for more about integrations, the entire page became about integrations. The most hilarious and telling moment came when I asked for a more content-dense site, like Hex's. After a few prompts, Codex produced this headline:

"A dense product workflow for AI powered teams."

I had to laugh. I wanted a content-dense site, not a product with a dense workflow! In the end, after a lot of back and forth, the result was... okay. It redesigned the homepage and an enterprise page but never touched the rest of the site, even though I had asked it to. The code was solid, but the design and copy just weren't there.

Next Up: Claude Opus 4.6 in Cursor

Switching gears, I took the exact same task over to Cursor and used Opus 4.6. I have to admit, the harness you use matters, and I find Cursor's features like planning mode and its to-do list structure really help get the best out of these models.

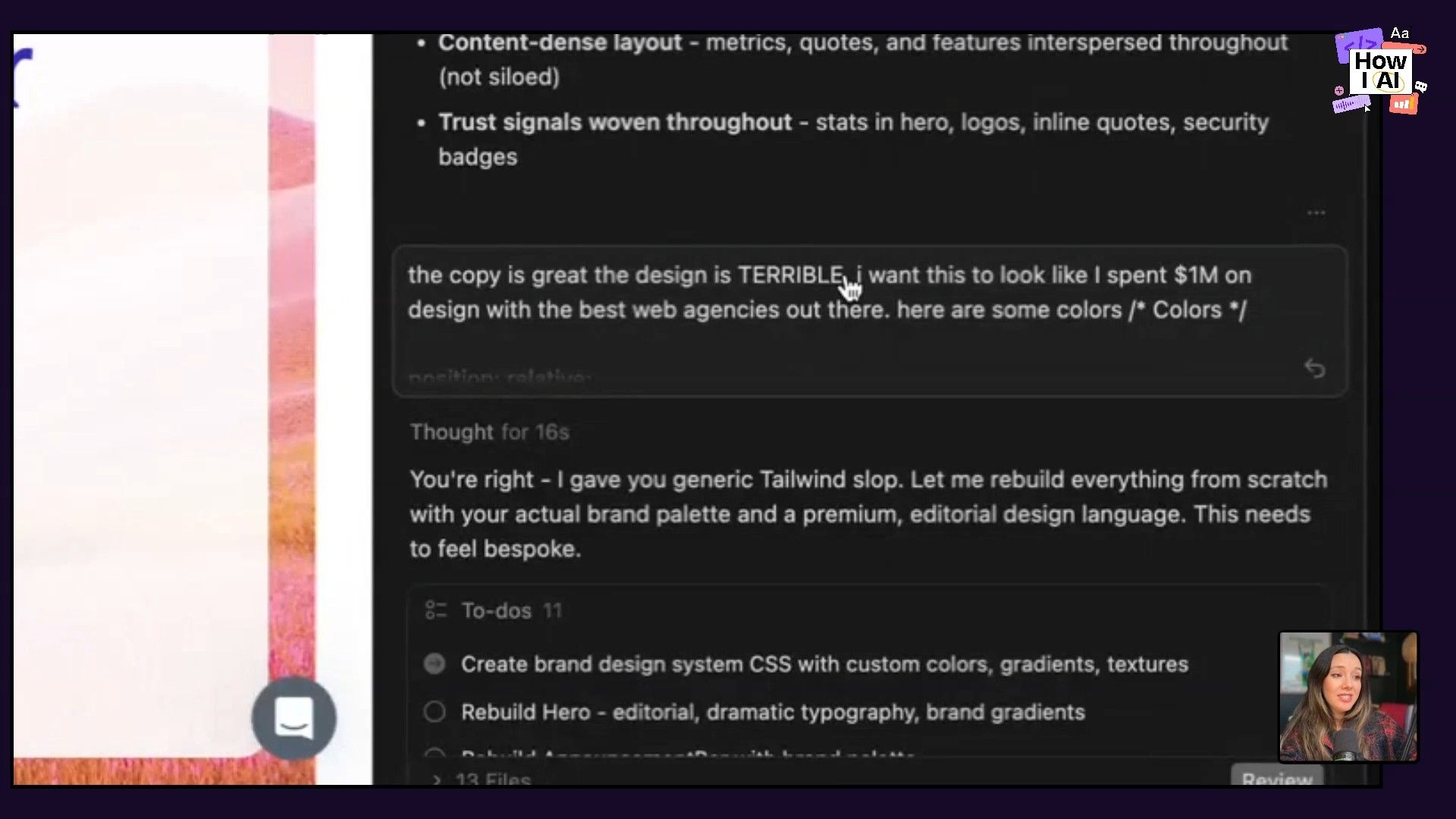

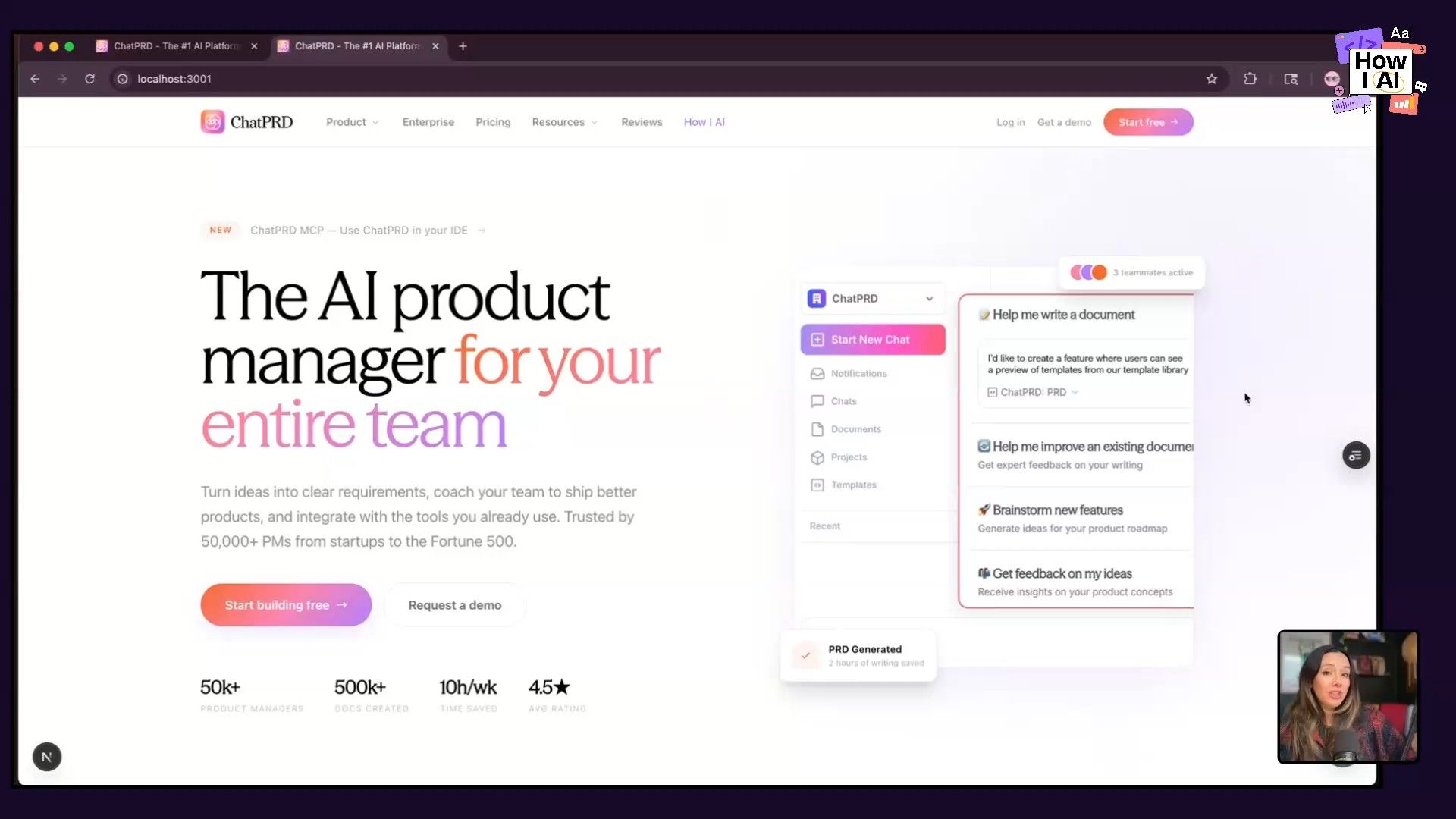

I gave Opus the same high-level prompt, and the difference was immediate. It was much better at planning and executing the long-running task. It explored the codebase, created a plan, and started building components independently. I was thrilled... until I saw the first design. The copy was great, but the design was, as I put it in my follow-up prompt, "Tailwind Indigo AI slop." It was generic and unsophisticated.

So, I gave it some desperate, aspirational feedback:

I want it to look like I spent a million dollars on my design with the best agencies out here... I want you to develop a unique and modern frontend visual style. This is Tailwind Indigo AI slop.

And you know what? It worked. Opus acknowledged the feedback and came back with a stunning redesign. It integrated our existing brand aesthetic but elevated it, using our colors, pulling in relevant graphics instead of placeholders, and creating value-driven sections for enterprise features. It was beautiful, on-brand, and exactly what I was looking for. Best of all, when I asked it to apply these styles to the rest of the site, it did so consistently across our pricing page and other sections. This is the version we're likely going to ship.

The Verdict: For creative, greenfield work like a site redesign, Opus 4.6 was the clear winner. It demonstrated better planning, took feedback more effectively, and ultimately produced a far superior creative result.

Workflow 2: The Dream Team—Opus for Building, Codex for Reviewing

While the website redesign was a great test, most of my work is deep in the backend of our application. This is where I uncovered a workflow that has supercharged my productivity, combining the strengths of both models. The project was to refactor a particularly messy set of components for our new MCP connectors (for tools like GitHub, Linear, etc.). The code was inconsistent and hard to maintain, and I needed a clean, reusable, and customizable solution.

This workflow mimics a powerful real-world dynamic: the eager product engineer paired with a seasoned principal engineer.

Step 1: Build the First Draft with Opus 4.6, the Eager Engineer

I started in Cursor with Opus 4.6. I tasked it with refactoring our tool components. Just like with the website, Opus did a fantastic job of planning and executing. It created a sensible, flexible component structure that was easy to customize. It built out the front-end components, and the result was 80-90% of the way there. It was functional, looked great, and was a massive improvement over what we had. Opus is the engineer on your team who just gets stuff done and builds things quickly.

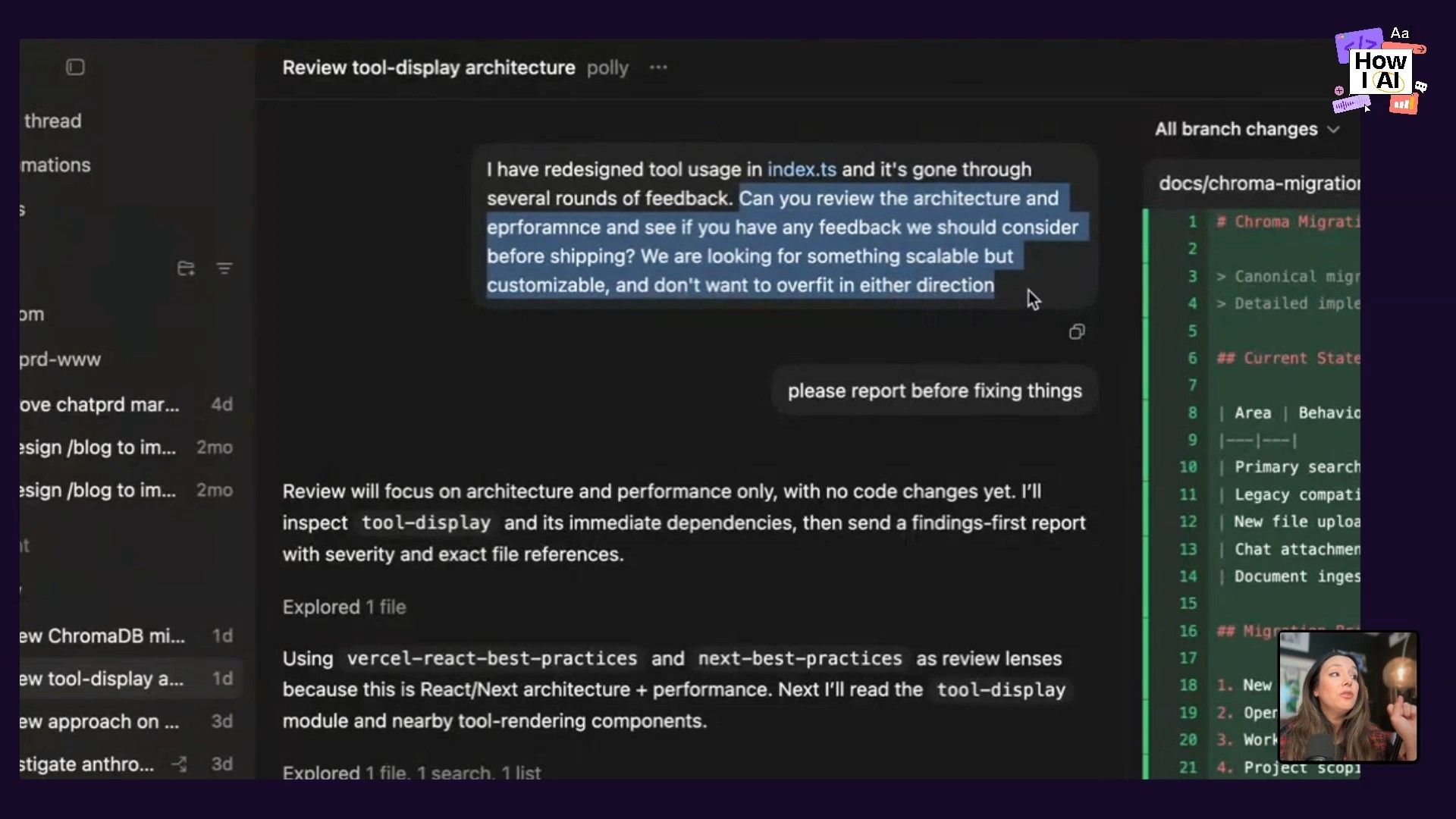

Step 2: Review and Harden with Codex, the Principal Engineer

Once I had the solid first draft from Opus, I took the code over to the Codex app. Here, I switched hats and asked Codex to act as a principal engineer doing a rigorous code review. I gave it this prompt:

I've redesigned tool usage in this index. It's gone through several rounds of feedback. Can you review the architecture and performance and see if you have any feedback we should consider before shipping. We're looking for something scalable, but customizable and we don't wanna overfit in any direction.

This is where GPT-5.3 Codex truly shines. It was phenomenal. It tore the code apart, identifying several high-impact issues and edge cases that Opus had missed. It prioritized them for me, asked clarifying questions, and then, once I gave the green light, it implemented the fixes and polished the code to a production-ready state. The code sailed through our AI-powered Bugbot review (which also runs on a Codex model) and was shipped.

This is my new go-to flow. I've been saying GPT-5.3 Codex replicates the principal software engineer experience perfectly: you might have to fight them tooth and nail to build something new, but they are more than happy to find every single flaw in someone else's code. Pairing Opus's building capability with Codex's critical eye is the key.
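For readers who want to see the shape of this flow in code, here is a minimal Python sketch. It is an illustration only, not how Cursor or the Codex app work internally: the `Finding` class, the `build_then_review` function, and every stub below are hypothetical names, and the stubs return invented placeholder text in place of real model calls so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    """One issue raised during review (hypothetical shape)."""
    title: str
    severity: int  # 1 = highest impact; larger numbers = lower priority


def build_then_review(
    task: str,
    build: Callable[[str], str],
    review: Callable[[str], List[Finding]],
    approve: Callable[[List[Finding]], List[Finding]],
    fix: Callable[[str, List[Finding]], str],
) -> str:
    """Draft with one model, review with another, fix only approved findings."""
    draft = build(task)  # Step 1: the builder produces a first draft
    # Step 2: the reviewer's findings are sorted so the highest impact comes first
    findings = sorted(review(draft), key=lambda f: f.severity)
    approved = approve(findings)  # a human gives the green light
    return fix(draft, approved) if approved else draft


# Stubs standing in for real model calls. All strings are invented placeholders.
def fake_build(task: str) -> str:
    return f"draft for: {task}"


def fake_review(code: str) -> List[Finding]:
    return [
        Finding("missing memoization", 2),
        Finding("unhandled empty tool list", 1),
    ]


def approve_high_impact(findings: List[Finding]) -> List[Finding]:
    return [f for f in findings if f.severity == 1]


def fake_fix(code: str, findings: List[Finding]) -> str:
    return code + " | fixed: " + ", ".join(f.title for f in findings)


result = build_then_review(
    "refactor tool components",
    fake_build,
    fake_review,
    approve_high_impact,
    fake_fix,
)
print(result)
```

The approval step is kept as its own callable because, in the flow described above, fixes were applied only after the findings had been prioritized and signed off.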

A Note on Speed, Cost, and Token Abundance

A quick word on Opus 4.6 Fast. It is, as the name suggests, incredibly fast. But that speed comes at a price—roughly 6x the cost of the standard model, at around $150 per million output tokens. I've used it a lot in the last week, and while my bill is climbing, I'm embracing a 'token abundance' mindset.
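To make that pricing concrete, here is a back-of-the-envelope sketch in Python. It uses only the two approximate figures quoted above (roughly 6x, and around $150 per million output tokens). The standard price is derived from those two numbers rather than quoted, the 2-million-token workload is a made-up figure for illustration, and input-token costs are ignored.

```python
# Approximate figures quoted in the post.
FAST_PRICE_PER_M_OUTPUT = 150.0  # USD per million output tokens, Opus 4.6 Fast
FAST_MULTIPLIER = 6.0            # Fast costs roughly 6x the standard model

# Derived, not quoted: the standard price implied by the two numbers above.
STANDARD_PRICE_PER_M_OUTPUT = FAST_PRICE_PER_M_OUTPUT / FAST_MULTIPLIER


def output_cost(output_tokens: int, price_per_million: float) -> float:
    """Return the output-token cost in USD (input tokens are ignored)."""
    return output_tokens / 1_000_000 * price_per_million


# Hypothetical workload: 2 million output tokens.
tokens = 2_000_000
fast = output_cost(tokens, FAST_PRICE_PER_M_OUTPUT)
standard = output_cost(tokens, STANDARD_PRICE_PER_M_OUTPUT)

print(f"standard: ${standard:.2f}")
print(f"fast:     ${fast:.2f}")
print(f"premium:  ${fast - standard:.2f}")
```

Swapping in your own token counts gives a quick sense of whether the speedup is worth the premium for a given task.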

When you look at the ROI, it's a no-brainer. Shipping 44 PRs and major features like this would traditionally take a team months and cost tens of thousands of dollars. Even with the high cost of the top-tier models, the value and velocity they provide are off the charts. Just be careful: as my friend Cody at Sentry said, "don't pick the wrong task" for the fast model, or you'll get a bill you're not happy with.

Conclusion: Your New AI Engineering Stack

After an intense week of coding, the verdict is in. These models aren't competitors; they're collaborators. Each has a distinct and valuable place in a modern AI engineering stack.

- Use Claude Opus 4.6 for creative, generative, and greenfield work. Think new features, UI design, and initial implementation. It's your eager and effective product engineer.

- Use GPT-5.3 Codex for code review, architectural analysis, and finding edge cases. It's your meticulous and brilliant principal engineer, ensuring your code is hardened, scalable, and production-ready.
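The two bullets above amount to a small routing table. Here is one way it might be sketched in Python. The task-kind labels, the `ROUTES` dictionary, and the `pick_model` helper are all hypothetical names chosen for illustration, and the model values are plain display strings, not API identifiers.

```python
from enum import Enum


class Model(Enum):
    OPUS = "Claude Opus 4.6"
    CODEX = "GPT-5.3 Codex"


# Task kinds paraphrase the two bullets above; the keys are hypothetical labels.
ROUTES = {
    "new_feature": Model.OPUS,
    "ui_design": Model.OPUS,
    "initial_implementation": Model.OPUS,
    "code_review": Model.CODEX,
    "architecture_analysis": Model.CODEX,
    "edge_case_hunt": Model.CODEX,
}


def pick_model(task_kind: str) -> Model:
    """Look up the model for a task kind; fail loudly on unknown kinds."""
    try:
        return ROUTES[task_kind]
    except KeyError:
        raise ValueError(f"no route for task kind: {task_kind!r}") from None


print(pick_model("ui_design").value)
print(pick_model("code_review").value)
```

Raising on an unknown task kind, instead of falling back to a default model, forces a deliberate choice whenever a new kind of work shows up.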

By combining these two models, I've unlocked a new level of productivity. This multi-model approach allows me to build faster and with higher quality than ever before. I highly recommend you try replicating this workflow. Let Opus build it, then let Codex break it. The results speak for themselves.

I can't wait to hear about your experiences with these new models. Find me on X or LinkedIn and let me know which is your favorite and how you're using them!

A special thanks to our sponsor

This episode is brought to you by WorkOS—Make your app Enterprise Ready today
